5.5.4. Dealing with fragility: Optimal Information Size (OIS)

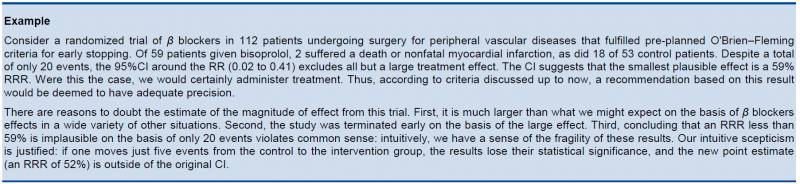

The clinical decision threshold criterion alone is not sufficient to deal with issues of precision: confidence intervals (CIs) may appear narrow, yet a small number of events may render the results fragile.

The reasoning above suggests the need for another criterion for adequate precision in addition to CIs. GRADE suggests the following: if the total number of patients included in a systematic review is less than the number of patients generated by a conventional sample size calculation for a single adequately powered trial, consider rating down for imprecision. Authors have referred to this threshold as the “optimal information size” (OIS). Many online calculators for sample size calculation are available; a simple one can be found at http://www.stat.ubc.ca/~rollin/stats/ssize/b2.html.

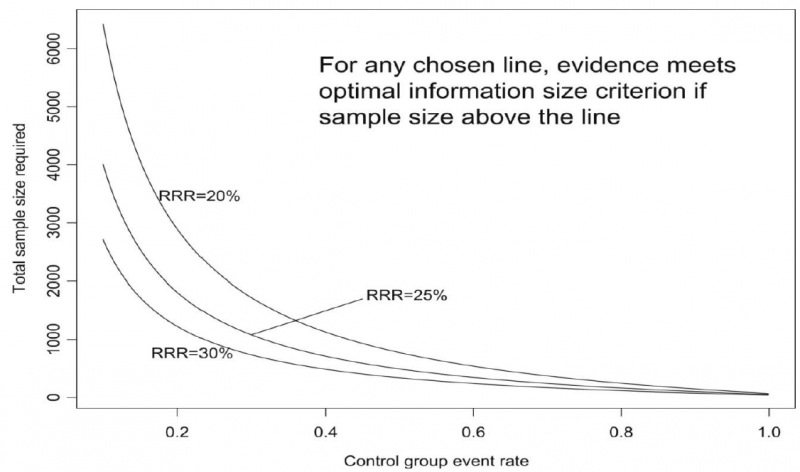

As an alternative to calculating the OIS, review and guideline authors can also consult a figure to determine the OIS. The figure presents the required sample size (assuming α of 0.05, and β of 0.2) for RRR of 20%, 25%, and 30% across varying control event rates. For example, if the best estimate of control event rate was 0.2 and one specifies an RRR of 25%, the OIS is approximately 2 000 patients.
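
The calculation behind such a figure can be sketched in a few lines. The example below is a minimal sketch, not the method used to draw the figure: it assumes a two-sided test of two independent proportions, 1:1 allocation, and the normal approximation without continuity correction. The function name `ois_binary` and its parameters are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def ois_binary(control_rate, rrr, alpha=0.05, beta=0.20):
    """Approximate OIS (total patients, both arms) for a binary outcome.

    Assumes a two-sided test, equal group sizes and the normal
    approximation without continuity correction.
    """
    # Event rate in the intervention group implied by the chosen RRR.
    treated_rate = control_rate * (1 - rrr)
    # Standard normal quantiles for the type I error and the power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(1 - beta)
    # Sum of the binomial variances of the two groups.
    variance = (control_rate * (1 - control_rate)
                + treated_rate * (1 - treated_rate))
    per_group = ((z_alpha + z_beta) ** 2 * variance
                 / (control_rate - treated_rate) ** 2)
    return 2 * ceil(per_group)


# Control event rate 0.2 and RRR 25%, as in the example above.
total_patients = ois_binary(control_rate=0.2, rrr=0.25)
print(total_patients)
```

With these inputs the sketch returns about 1 800 patients, the same order of magnitude as the approximately 2 000 read from the figure. Other formulas (for example, with continuity correction) give somewhat larger numbers.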

The choice of RRR is a matter of judgment. The GRADE handbook suggested using RRRs of 20% to 30% for calculating the OIS, but there may be instances in which compelling prior information would suggest choosing a larger value for the RRR for the OIS calculation.

Beware, however, of basing the OIS on the RRR of minimal clinical importance. That practice is suitable for sample size calculations when setting up a study, but not for judging fragility, because it leads to paradoxes: when the clinically important effect is small (e.g. a small effect on mortality in children would be considered important) and the expected effect is considerably larger, the required sample size becomes very large and you risk downgrading without good reason. Note that the OIS helps to judge the stability of the CIs, not whether the study was large enough to detect a difference.

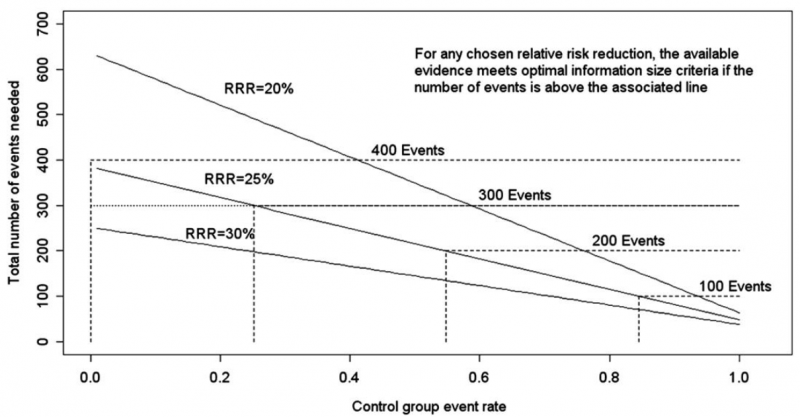

Power is, however, more closely related to number of events than to sample size. The figure presents the same relationships using total number of events across all studies in both treatment and control groups instead of total number of patients. Using the same choices as in the prior paragraph (control event rate 0.2 and RRR 25%), one requires approximately 325 events to meet OIS criteria.
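
The number of events corresponding to a given OIS can be approximated by multiplying the per-group sample size by the sum of the two event rates. The sketch below makes the same assumptions as a conventional two-proportion calculation (two-sided test, 1:1 allocation, normal approximation without continuity correction). The function name `ois_events` is hypothetical, and the figure may have been produced with a different method.

```python
from math import ceil
from statistics import NormalDist


def ois_events(control_rate, rrr, alpha=0.05, beta=0.20):
    """Approximate total number of events (both arms) needed to meet the OIS."""
    # Event rate in the intervention group implied by the chosen RRR.
    treated_rate = control_rate * (1 - rrr)
    z_sum = (NormalDist().inv_cdf(1 - alpha / 2)
             + NormalDist().inv_cdf(1 - beta))
    variance = (control_rate * (1 - control_rate)
                + treated_rate * (1 - treated_rate))
    # Patients per group for a two-sided comparison of two proportions.
    per_group = ceil(z_sum ** 2 * variance
                     / (control_rate - treated_rate) ** 2)
    # Expected events: per-group size times the event rate in each group.
    return round(per_group * (control_rate + treated_rate))


# Control event rate 0.2 and RRR 25%, as in the paragraph above.
total_events = ois_events(control_rate=0.2, rrr=0.25)
print(total_events)
```

For these inputs the sketch yields a little over 300 events, in line with the approximately 325 events read from the figure.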

Calculating the OIS for continuous variables requires specifying:

- probability of detecting a false effect – type I error (α; usually 0.05)

- probability of detecting a true effect – power (1 – β, where β is the type II error; usually 80%, i.e. β = 0.20)

- realistic difference in means (Δ)

- appropriate standard deviation (SD) from one of the relevant studies (we suggest the median SD of the available trials or the SD from a dominating trial, if one exists).
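
The four inputs listed above can be combined as in the following minimal sketch. It assumes a two-sided comparison of two means with equal group sizes, a common SD, and the normal approximation. The function name `ois_continuous` and the example values (Δ = 5, SD = 10) are hypothetical and chosen only for illustration.

```python
from math import ceil
from statistics import NormalDist


def ois_continuous(delta, sd, alpha=0.05, beta=0.20):
    """Approximate OIS (total patients, both arms) for a continuous outcome.

    delta: realistic difference in means; sd: common standard deviation.
    Assumes a two-sided test, equal group sizes, normal approximation.
    """
    z_sum = (NormalDist().inv_cdf(1 - alpha / 2)
             + NormalDist().inv_cdf(1 - beta))
    # Per-group size: 2 * ((z_alpha + z_beta) * SD / delta)^2
    per_group = 2 * (z_sum * sd / delta) ** 2
    return 2 * ceil(per_group)


# Hypothetical inputs: difference in means of 5 units, SD of 10 units.
total_patients = ois_continuous(delta=5, sd=10)
print(total_patients)
```

Because the required size depends on the squared ratio SD/Δ, halving the difference one wishes to detect roughly quadruples the OIS.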

For continuous variables we should downgrade when the total population size is less than 400 (a rule-of-thumb threshold; using the usual α and β, and an effect size of 0.2 SD, representing a small effect). In general, a number of events of more than 400 guarantees the stability of a confidence interval.