KCE Process Book

KCE Webmaster Mon, 11/15/2021 - 21:50

INTRODUCTION

KCE Webmaster Mon, 11/15/2021 - 21:52

About Good Clinical Practice (GCP)

KCE Webmaster Mon, 11/15/2021 - 21:54Analysis of clinical practices and development of recommendations of good practice Good Practice recommendations include the most up-to-date medical knowledge. They provide a tool for physicians during diagnosis and treatment.

They are drawn up according to highly codified principles, based on scientific information regularly updated from the international literature. KCE analyses clinical practices in current use on the basis of existing recommendations. Recommendations must be regularly adapted in the light of constantly changing evidence.

The KCE generally works with Belgian and international scientific associations for these studies.

About Health Services research (HSR)

KCE Webmaster Mon, 11/15/2021 - 21:56In this field of research, the KCE tries to answer the following questions:

- What is the best way to organise and finance healthcare in Belgium?

- How do we keep healthcare accessible and affordable?

- Does everyone have equal access to healthcare?

- What are the objectives of our healthcare system? Are these objectives achieved?

HSR projects cover various scientific disciplines: medicine, economics, sociology, biostatistics, psychology. They require a wide range of investigation strategies, executed by a multidisciplinary research team.

Through HSR, the KCE hopes to hand elements based upon scientifically research that can be used to optimise the management and organisation of the healthcare sector in Belgium. Healthcare must be of the highest quality and be accessible to the largest number of people at an affordable price.

About Health Technology Assessment (HTA)

KCE Webmaster Mon, 11/15/2021 - 21:57The market is flooded with generally expensive technologies, treatments and drugs that are often presented as innovative and promising. The aim of the HTA studies is to assess the effectiveness and the safety of these technologies, treatments and drugs. The researchers also attempt to measure the cost effectiveness of the treatments or technologies studied: is the health benefit in proportion to the cost? Does the technology or treatment offer an affordable added value?

KCE made HTA projects one of the foundations of the KCE’s mission. Not because they are a means of justifying savings in the health sector, or as a barrier to the introduction of new technologies, but because they can be used to support the public authorities in their desire to offer added value to patients and to use the available resources as efficiently as possible.

Innovation does not necessarily mean higher healthcare costs. Innovation means offering better and less expensive products. Through its HTA studies, the KCE contributes to optimal allocation of the limited resources available.

METHODOLOGICAL APPROACHES

KCE Webmaster Tue, 11/16/2021 - 17:41

SEARCH FOR EVIDENCE (GCP - HTA)

This chapter describes the methods of a literature review for the KCE. It provides guidance for reviewers on the various steps of the search, appraisal and presentation of the results.

New evidence may change some of the recommendations made, thereby researchers should consider this as a ‘living document’ for which yearly updates will be required.

This document is mainly based on the following sources of information:

- KCE Process Documents and Notes (KCE and Deloitte, 2003)

- The Cochrane Collaboration Handbook (Higgins and Green, 2011)

- SIGN 50 (SIGN, 2008)

- CRD’s guidance for undertaking reviews in health care (Centre for Reviews and Dissemination (CRD), 2009)

- The QUOROM statement (Moher et al., 1999)

- GRADE (Grade org)

- The KCE Process Notes GCP (Van den Bruel et al., 2007), HSR (Van de Voorde and Léonard, 2007), HTA (Cleemput et al., 2007).

An evidence report consists of the following steps:

1. Introduction

A protocol for carrying out a review is equivalent to, and as important as, a protocol for a primary research study. A review is less likely to be biased if the questions are well developed beforehand, and the methods that will be used to answer them are decided on before gathering the necessary data and drawing inferences. In the absence of a protocol, it is possible that study selection and analysis will be unduly driven by (a presumption of) the findings.

A search strategy consists of several aspects. The research question (in a structured format, see Building a search question) should be used as a guide to direct the search strategy. For electronic searches, it is important to list the databases in which studies will be sought. Other sources can be consulted in order to identify all relevant studies. These include reference lists from relevant primary and review articles, journals, grey literature and conference proceedings, research registers, researchers and manufacturers, and the internet.

In practice, it is uncommon for a single search to cover all the questions being addressed within a review. Different questions may be best answered by different databases, or may rely on different study types. Authors are encouraged to take an iterative approach to the search, carrying out a search for high-level evidence first. After evaluating the results of this first search, the questions may need to be redefined and subsequent searches may need to be focused on more appropriate sources and study types.

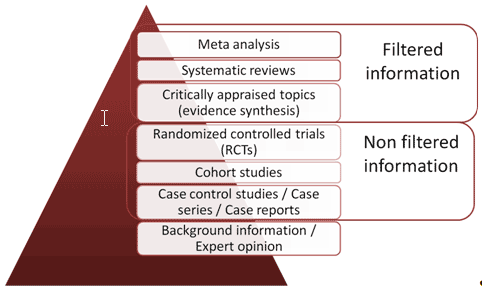

In some cases, directly relevant good-quality evidence syntheses (secondary sources), such as good-quality systematic reviews or Health Technology Assessments (HTA), will be available on some of the issues that fall within the remit of the review. In these circumstances reference will be made to the existing evidence rather than repeating work that already has been done. All HTA reports or systematic reviews that are identified must be evaluated on their quality and must be shown to have followed an acceptable methodology before they can be considered for use in this way.

In other cases existing evidence may not be directly relevant or may be found to have methodological weaknesses. In these cases, existing evidence cannot be used in the review. Nevertheless, excluded systematic reviews or HTA reports still can be a useful source of references that might be used later on in the review.

In conclusion, literature searches for the KCE should follow an iterative approach, searching for evidence syntheses first and subsequently complementing this search by searching for original studies. Various resources are listed in the following paragraph.

2. Building a search question

Constructing an effective combination of search terms for searching electronic databases requires a structured approach. One approach involves breaking down the review question into ‘facets’. Several generic templates exist, e.g. PICOS (Population, Intervention, Comparator, Outcome and Study design), PIRT (Population, Index test, Reference test, Target disorder), SPICE, ECLIPSE, SPIDER, etc. (See Appendices).

The next stage is to identify the search terms in each ‘facet’ which best capture the subject. The group of search terms covering each facet of the review question should include a range of text words (free text to be searched in the title or abstract of studies). Text words and their variants can be identified by reading relevant reviews and primary studies identified during earlier searches or a pre-assessment of the literature. Information on the subject indexing used by databases can be found by consulting the relevant indexing manuals and by noting the manner in which key retrieved articles have been indexed by a given database.

The final search strategy will be developed by an iterative process in which groups of terms are used, perhaps in several permutations, to identify the combination of terms that seems most sensitive in identifying relevant studies. This requires skilled adaptation of search strategies based on knowledge of the subject area, the subject headings and the combination of ‘facets’ which best capture the topic.

3. Searching electronic sources

The decision on which source to use depends on the research question. The three electronic bibliographic databases generally considered being the richest sources of primary studies - MEDLINE, EMBASE, and CENTRAL - are essential in any literature review for the KCE. However, many other electronic bibliographic databases exist.

Systematic reviews can be found in the Cochrane Database for Systematic Reviews or in Medline. Search strategies have been developed to enhance the identification of these types of publications (Kastner, 2009; Montori, 2005).

HTA reports can be found in the HTA database of INAHTA or at individual agencies’ sites.

Specifically for drugs and technology reviews, data from the US Federal Drug Administration (FDA) or EMA can be helpful.

Providing an exhaustive list of all potential sources is not possible here. The KCE library catalogue provides a list of such sources.

Access to electronic resources happens through the following digital libraries:

More than 10.000 e-journals and 8700 Ebooks (IP recognition)

More than 10.000 e-journals and 8700 Ebooks (IP recognition)

Access to databases, journals and eBooks via CEBAM DLH (login required)

Access to databases, journals and eBooks via CEBAM DLH (login required)

3.1 Sources of biomedical literature

Core databases

- MEDLINE contains records from 5600 journals (39 languages) in the of biomedical field, from 1946 onwards (Access for KCE | Free access through PubMed).

- EMBASE: Records from 7600 journals (70 countries, 2000 not covered by Medline) in biomedical field, from 1974 onwards (Access for KCE).

- CENTRAL - The Cochrane Controlled Trials Register, part of the Cochrane Library: Records of randomised controlled trials and controlled clinical trials in healthcare identified through the work of the Cochrane Collaboration including large numbers of records from MEDLINE and EMBASE as well as much material not covered by these databases (Dickersin, 2002). (Access for KCE through CDLH | Free access to abstracts)

Databases for systematic reviews

- Cochrane Database of Systematic Reviews (CDSR, part of the Cochrane Library) lists the results of systematic reviews (full text) conducted by Cochrane groups, but also ongoing projects (Access for KCE through CDLH | Free access to abstracts)

- Special queries exist for Medline or Embase to limit the identified records to articles identified as Systematic reviews. See appendix.

- CRD Database of reviews of effectiveness (DARE) contains structured abstracts, including critical appraisal, of systematic reviews identified by regular searching of bibliographic databases, and handsearching of key journals. [the update of CRD DARE has ceased March 2015]

Databases for HTA reports

- The INAHTA HTA database is a bibliographical database of published HTA reports; it also lists ongoing HTA projects. Members of INAHTA are regularly invited to update their information on the HTA database, informaiton from the main HTA producers is also collected by the database maintainer. Access is free, records of the HTA database are also searchable via the Cochrane Library.

- HTA reports can also be found at individual agencies’ sites, lsit of HAT bodies can be found at network organisations such as INAHTA, HTAi.

Databases for specific topics

- Nursing: CINAHL (Cumulative Index to Nursing and Allied Health Literature), British Nursing Index (BNI) (Access for KCE through CDLH)

- Physiotherapy: PEDro (contains records of RCTs, systematic reviews and evidence-based clinical practice guidelines in physiotherapy, from 1929 onwards; most trials in the database have been rated for quality to quickly discriminate between trials that are likely to be valid and interpretable and those that are not; free access)

- Psychology and Psychiatry: PsycInfo (Access for KCE)

- More bibliographic databases are listed on the KCE library catalogue (e.g. CAM, ageing, ...)

3.2 Sources of economic literature

Core database

- NHS Economic Evaluation Database (NHS EED) contains over 7000 abstracts of quality assessed economic evaluations. The database aims to assist decision-makers by systematically identifying and describing economic evaluations, appraising their quality and highlighting their relative strengths and weaknesses. [the update of CRD NHS EED has ceased March 2015]

- Some of the search filters for Medline or Embase limit the records to articles related to Costs, Economic evaluations, Economics

Complementary databases

- EconLit:database of economics publications including peer-reviewed journal articles, working papers from leading universities, PhD dissertations, books, collective volume articles, conference proceedings, and book reviews (Access for KCE)

3.3 Sources of clinical practice guidelines

Often, specific guidelines can only be retrieved through local websites of scientific associations or government agencies. It is therefore recommended to combine a Medline search (with specific filters for guidelines) with a search of the following:

- International Guideline Library (G-I-N): database of the Guideline International Network (KCE is member of GIN and has full access to the records)

- EBMpracticenet: DUODECIM guidelines, free access in Belgium, funded by RIZIV-INAMI, translation in Dutch and French; adaptation to Belgian context ongoing

- More sources of guidelines are available on BIBKCE under Databases, Practice Guidelines or Practice Guideline, Publishers' catalogue

3.4 Sources of ongoing clinical trials

Ongoing trials may have limited use as a means of identifying studies relevant to systematic reviews, but may be important so that when a review is later updated, these studies can be assessed for possible inclusion. Several initiatives have been taken recently to register ongoing trials:

- International Clinical Trials Registry Platform (ICTRP)

- EU Clinical Trials Register

- ClinicalTrials.gov

- Current Controlled Trials

- More clinical trials register are listed on the KCE library catalogue

3.5. Sources of grey literature

More and more electronic sources describe "grey literature" (results of scientific research not published in scientific journals; e.g. reports, working papers, thesis, conference papers, ...)

Institutional repositories

- OAIster

- Base

- OpenAIRE

- EconPapers (REPEC)

- More repositories are listed on the KCE library catalogue

3.6 Building a search strategy

For each database, search terms defined in the preparation phase will be mapped to the Thesaurus terms of the database (when available). Mapping can be achieved using the built-in functionality of the search interface, or manually by looking at the indexation of previously identified pertinent articles. Attention will need to be paid to the explosion tool (sometimes selected by default linke in PubMed, sometimes not like in OVID Medline).

The most important synonyms of the Thesaurus terms identified for each facet will also be added to the search strategy as text word. Advanced functionalities of the search interfaces will be used (see below: truncation, wildcard, proximity operators).

The terms within a specific facet will be combined with the Boolean operator ‘OR’ in order to group all articles dealing with this facet. For some concepts, special queries (also called search filters) have been developed (see below). The resulting groups of articles will then be combined using the Boolean operator ‘AND’.

It is recommended to validate each search strategy by a second reviewer.

3.6.1 Search tools

Boolean and proximity operators

In the context of database searching, Boolean logic refers to the logical relationships among search terms. Classical Boolean operators are ‘AND’, ‘OR’ and ‘NOT’, which can be used in most databases. Importantly, in some databases, such as PubMed, these Booleans need to be entered in uppercase letters. Other operators, the so-called proximity operators, are ‘NEAR’, ‘NEXT’ and ‘ADJ’. A more detailed overview of Boolean and proximity operators is provided in Appendix.

Truncation & wildcards

Truncation can be used when all terms that begin with a given text string are to be found. Different databases use different characters for truncation with different functionalities. For example, in PubMed, OVID and EMBASE ‘unlimited’ truncation is represented by the asterix ‘*’, but OVID Medline also uses ‘$’.

In OVID Medline the ‘optional’ wildcard character ‘?’ can be used within or at the end of a search term to substitute for 1 or 0 characters. In contrast, in EMBASE a question mark indicates exactly one character.

A more detailed overview is provided in appendix.

3.6.2 Search limits

When the amount of resulting hits is too high to be managed within the available timeframe / resources, search limits may be applied.

First, tools related to the Thesaurus should be considered:

- Focus / Major Heading: limits to the articles that have been indexed with the term as Major Heading. This helps to reduce the amount of results (up to 40%) while keeping a good pertinence thanks to the human indexation of the full article (in case of Medline and Embase).

- Subheading: these are also added to the description of an article by the indexers, but should be used with more precaution (can render the search strategy too restrictive).

Several search interfaces provide search limits that can also be applied to narrow the search. Classical examples are date and language limits, but some databases also provide limits according to age, gender, publication type etc. Before applying search limits, the risk of a too specific (i.e. narrow) search should be considered.

3.6.3. Search filters

In systematic reviews, if time and resources allow, specificity is often sacrificed in favour of sensitivity, to maximize the yield of relevant articles. Therefore, it is not unusual to retrieve large numbers (possibly thousands) of bibliographic references for consideration for inclusion in an extensive systematic review. This means that reviewers may have to spend a lot of time scanning references to identify perhaps a limited number of relevant studies.

Search filters are available to focus the search according to the type of study that is sought, for example to focus on randomized controlled trials, diagnostic accuracy studies, prognostic studies or systematic reviews (see example in appendix). Specific search filters also exist for well-circumscribed clinical problems/populations, e.g. child health (Boluyt, 2008), palliative care (Sladek, 2007), or nephrology (Garg, 2009).

Sources of filters include:

- PubMed at the Clinical Queries screen

- InterTASC: http://www.york.ac.uk/inst/crd/intertasc/index.htm

- SIGN website: http://www.sign.ac.uk/methodology/filters.html

- HiRU: http://hiru.mcmaster.ca/hiru/

- OVID or Embase.com

During the selection of an appropriate search filter, aspects of testing and validation should play an important role. Specific appraisal tools are available to evaluate the methodological quality of search filters (Bak, 2009; Glanville, 2009).

For diagnostic studies, it is recommended not to use a search filter.

3.7 Documenting a search strategy

The search strategy for electronic databases should be described in sufficient detail to allow that

- the process could be replicated

- an explanation could be provided regarding any study not included in the final report (identified by electronic sources search or not)

The template required by KCE to describe a search strategy is provided in attachment.

All identified references must be exported, preferably in a text file to be imported in a Reference Management Software (see appendix for technical description).

Files

4. Searching supplementary sources

Checking references lists

- Authors should check the reference lists of articles obtained (including those from previously published systematic reviews) to identify relevant reports. The process of following up references from one article to another is generally an efficient means of identifying studies for possible inclusion in a review.

- Because investigators may selectively cite studies with positive results (Gotzsche 1987; Ravnskov 1992), reference lists should never be used as a sole approach to identifying reports for a review, but rather as an adjunct to other approaches.

Using related citation tools

- Several electronic sources provide a "Find related" functionality. This functionality is often based on a poorly detailed (and thus difficult to describe and reproduce) algorithm (using theseaurus terms, keywords, ...). Therefore, we recommend to list the identified supplemental references under "Related citations".

- Several electronic sources provide a "find citing articles" functionality. This functionality is often related to the quality of the references provided by the authors and thus not always exact. Therefore, we recommend to list the identified supplemental references under "Citing articles".

Other supplementary sources

- Websites

- Handsearching of journals

- Experts in the field

- Etc.

5. Searching for evidence on adverse effects

The first sources to investigate for information on adverse effects are reports from trials or other studies included in the systematic review. Excluded reports might also provide some useful information.

There are a number of specific sources of information on adverse effects of drugs, including:

- Europe: European Medicines Agency, www.ema.europa.eu

- US: Food and Drug Administration, www.fda.gov/medwatch

- UK: Medicines and Healthcare Products Regulatory Agency, www.mhra.gov.uk

- Australia: Australian Adverse Drug Reactions Bulletin, www.tga.gov.au/adr/aadrb.htm

- The Netherlands: Landelijke Registratie en Evaluatie van Bijwerkingen, www.lareb.nl

In Belgium, there is currently no public database on adverse drug events. Regulatory authorities (such as the websites of FDA and EMA) and the drug manufacturer may be able to provide some information. Information on adverse effects should also be sought from other types of studies than those considered appropriate for the systematic review (e.g. cohort and case-control studies, uncontrolled [phase I and II] trials, case series and case reports). However, all such studies and reports are subject to bias to a greater extent than randomized trials, and findings must be interpreted with caution.

6. Selecting studies

KCE Webmaster Tue, 11/16/2021 - 16:39Study selection is a multi-stage process. The process by which studies will be selected for inclusion in a review should be described in the review protocol.

6.1. Inclusion and exclusion criteria

KCE Webmaster Tue, 11/16/2021 - 17:41The final inclusion/exclusion decisions should be made after retrieving the full texts of all potentially relevant citations. Reviewers should assess the information contained in these reports to see whether the criteria have been met or not. Many of the citations initially included may be excluded at this stage.

The criteria used to select studies for inclusion in the review must be clearly stated:

6.1.1. Types of participants

KCE Webmaster Tue, 11/16/2021 - 17:41The diseases or conditions of interest should be described here, including any restrictions on diagnoses, age groups and settings. Subgroup analyses should not be listed here.

6.1.2. Type of interventions

KCE Webmaster Tue, 11/16/2021 - 17:41Experimental and control interventions should be defined here, making it clear which comparisons are of interest. Restrictions on dose, frequency, intensity or duration should be stated. Subgroup analyses should not be listed here.

6.1.3. Types of outcome measures

KCE Webmaster Tue, 11/16/2021 - 17:41Note that outcome measures do not always form part of the criteria for including studies in a review. If they do not, then this should be made clear. Outcome measures of interest should be listed in this section whether or not they form part of the inclusion criteria.

For most reviews it will be worthwhile to pilot test the inclusion criteria on a sample of articles (say ten to twelve papers, including ones that are thought to be definitely eligible, definitely not eligible and questionable). The pilot test can be used to refine and clarify the inclusion criteria, train the people who will be applying them and ensure that the criteria can be applied consistently by more than one person.

Even when explicit inclusion criteria have been specified, decisions concerning the inclusion of individual studies remain relatively subjective. There is evidence that using at least two authors has an important effect on reducing the possibility that relevant reports will be discarded (Edwards et al. 2002). Agreement between assessors may be formally assessed mathematically using Cohen's Kappa (a measure of chance-corrected agreement). Many disagreements may be simple oversights, whilst others may be matters of interpretation. These disagreements should be discussed, and where possible resolved by consensus after referring to the protocol. If disagreement is due to lack of information, the authors may have to be contacted for clarification. Any disagreements and their resolution should be recorded.

The influence of uncertainty about study selection may be investigated in a sensitivity analysis.

It is useful to construct a list of excluded studies at this point, detailing the reason for each exclusion. This list may be included in the report of the review as an appendix. The final report of the review should also include a flow chart or a table detailing the studies included and excluded from the review. In appendix a flow chart is provided for documenting study selection. If resources and time allow, the lists of included and excluded studies may be discussed with the expert panel. It may be useful to have a mixture of subject experts and methodological experts assessing inclusion.

6.1.4. Types of studies

KCE Webmaster Tue, 11/16/2021 - 17:41Eligible study designs should be stated here, along with any thresholds for inclusion based on the conduct or quality of the studies. For example, ‘All randomised controlled comparisons’ or ‘All randomised controlled trials with blind assessment of outcome’. Exclusion of particular types of randomised studies (for example, cross-over trials) should be justified.

It is generally for authors to decide which study design(s) to include in their review. Some reviews are more restrictive, and include only randomized trials, while others are less restrictive, and include other study designs as well, particularly when few randomized trials addressing the topic of the review are identified. For example, many of the reviews from the Cochrane Effective Practice and Organization of Care (EPOC) Collaborative Review Group include before-and-after studies and interrupted time series in addition to randomized and quasi-randomized trials.

6.2. Selection process

KCE Webmaster Tue, 11/16/2021 - 17:41Before any papers are acquired for evaluation, sifting of the search output is carried out to eliminate irrelevant material.

- Papers that are clearly not relevant to the key questions are eliminated based on their title.

- Abstracts of remaining papers are then examined and any that are clearly not appropriate study designs, or that fail to meet specific methodological criteria, will be also eliminated at this stage.

- All reports of studies that are identified as potentially eligible must then be assessed in full text to see whether they meet the inclusion criteria for the review.

The reproducibility of this process should be tested in the initial stages of the review, and if reproducibility is shown to be poor more explicit criteria may have to be developed to improve it.

Authors must decide whether more than one author will assess the relevance of each report. Whatever the case, the number of people assessing the relevance of each report should be stated in the Methods section of the review. Some authors may decide that assessments of relevance should be made by people who are blind or masked to the journal from which the article comes, the authors, the institution, and the magnitude and direction of the results by editing copies of the articles (Berlin 1997; Berlin, Miles, and Crigliano 1997). However, this takes much time, and may not be warranted given the resources required and the uncertain benefit in terms of protecting against bias (Berlin 1997).

7. Quality assessment of studies

Critical appraisal of articles is a crucial part of a literature search. It aims at identifying methodological weaknesses and assessing the quality in a coherent way. The methodological assessment is based on a number of key questions that focus on those aspects of the study design that have a significant influence on the validity of the results reported and conclusions drawn. These key questions differ according to the study type, and a range of checklists can be used to bring a degree of consistency to the assessment process. The checklists for systematic reviews, randomized controlled trials, cohort studies and case-control studies discussed below were selected during several internal workshops at the KCE. The other checklists (for diagnosis studies for instance) will also be discussed.

The process of critical appraisal consists of an evaluation by two independent reviewers who confront their results and discuss them with a third reviewer in case of disagreement. However, because of feasibility it could be acceptable that one reviewer does the quality appraisal and that a second reviewer checks the other’s work.

If necessary, the authors of the evaluated study should be contacted for additional information.

The results of the critical appraisal should be reported in a transparent way.

7.1. Critical appraisal of systematic reviews

From the several instruments available to assess methodological quality of reviews (1); KCE recommends the use of AMSTAR 2 (2) that takes into account RCT but also non RCT studies.

An alternative is the ROBINS-tool which is more comprehensive for non randomized studies. (3)

References

(1) See among other overviews

- Zeng X, Zhang Y, Kwong JSW, Zhang C, Li S, Sun F, et al. The methodological quality assessment tools for preclinical and clinical studies, systematic review and meta-analysis, and clinical practice guideline: a systematic review. Journal of Evidence-Based Medicine. 2015;8(1):2-10.

- Pieper D, Antoine S-L, Morfeld J-C, Mathes T, Eikermann M. Methodological approaches in conducting overviews: current state in HTA agencies. Research Synthesis Methods. 2014;5(3):187-99

(2) Shea Beverley J, Reeves Barnaby C, Wells George, Thuku Micere, Hamel Candyce, Moran Julian et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both BMJ 2017; 358 :j4008

Updates

[Update 20180126] AMSTAR 2 replaces AMSTAR in the toolbox

AMSTAR 2 aims at responding to AMSTAR's criticisms, among others the fact that AMSTAR does not cover non RCT studies.

- Shea BJ, Grimshaw JM, Wells GA, Boers M, Andersson N, Hamel C, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7:10

- Burda BU, Holmer HK, Norris SL. Limitations of A Measurement Tool to Assess Systematic Reviews (AMSTAR) and suggestions for improvement. Syst Rev. 2016;5(1):58.

[Update] Dutch Cochrane checklist removed from the toolbox

KCE experts initially selected 2 checklists for quality appraisal: AMSTAR and the Dutch Cochrane checklist. However, the Dutch Cochrane tool is not used anymore by its authors and was never formally validated. It has thus been removed from the toolbox.

Files

7.2. Critical appraisal of randomized controlled trials for interventions

For the quality appraisal of randomized controlled trials for interventions, the Cochrane Collaboration’s Risk of Bias Tool is recommended [1].

This checklist contains hints on how to interpret and score the individual items, and is summarised in the attachement "Cochrane Collaboration's Risk of Bias Tool". It is also extensively explained in chapter 8 of the Cochrane Handbook (https://training.cochrane.org/handbook). Each item can be scored with low, unclear or high risk of bias. Importantly, performance bias (blinding) and attrition bias (incomplete outcome data) should be assessed for each critical and important outcome as selected according to GRADE. If insufficient detail is reported of what happened in the study, the judgement will usually be unclear risk of bias.

The recommended level at which to summarize the risk of bias in a study is for an outcome within a study, because some risks of bias may be different for different outcomes. A summary assessment of the risk of bias for an outcome should include all of the entries relevant to that outcome: i.e. both study-level entries, such as allocation sequence concealment, and outcome specific entries, such as blinding.

Some methodological issues, such as the correctness of the statistical analysis, power, etc. are not specifically addressed in this tool, and should be assessed separately.

The scores can be filled in using the template in attachment.

[1] KCE experts initially selected 2 checklists for quality appraisal: the Risk of Bias Tool and the Dutch Cochrane checklist. However, the Dutch Cochrane tool is not used anymore by its authors and was never formally validated.

Files

7.3. Critical appraisal of diagnostic accuracy studies

For the quality appraisal of diagnostic accuracy studies, the QUADAS 2 instrument is recommended (Whiting, 2003). The tool is structured so that 4 key domains are each rated in terms of the risk of bias and the concern regarding applicability to the research question. Each key domain has a set of signalling questions to help reach the judgments regarding bias and applicability. A background document on QUADAS 2 can be found on the website: http://www.bris.ac.uk/quadas/quadas-2.

In order to correctly appraise a diagnostic accuracy study, basic knowledge about key concepts is essential. An overview of these concepts is provided in the following table:

Three phases can be distinguished in the QUADAS tool:

- Phase 1: State the review question using the PIRT format (Patients, Index test(s), Reference standard, Target condition)

- Phase 2: Draw a flow diagram for the primary study, showing the process of recruiting, inclusion, exclusion and verification

- Phase 3: Risk of bias and applicability judgments.

The score can be filled in using the template in attachment.

Files

7.4. Critical appraisal of observational studies

Unlike systematic reviews, randomized controlled trials, diagnostic studies and guidelines, the methodological research community has less agreement on which items to use for the quality appraisal of cohort studies, case-control studies and other types of observational evidence. The Dutch Cochrane Centre has a few checklists available (http://dcc.cochrane.org/beoordelingsformulieren-en-andere-downloads), but these are written in Dutch and were not formally validated. For the evaluation of prospective, non-randomized, controlled trials, the Cochrane Collaboration’s Risk of Bias Tool can be used. Other checklists can be found at: http://www.unisa.edu.au/Research/Sansom-Institute-for-Health-Research/Research-at-the-Sansom/Research-Concentrations/Allied-Health-Evidence/Resources/CAT/. GRADE also offers a number of criteria that can be used to judge the methodological quality of observational studies. These are further explained in the chapter on GRADE.

Mainly based on the checklists of SIGN and NICE, the KCE elaborated two new checklists for cohort studies and case-control studies (see attachment).

Files

7.5. Critical appraisal of guidelines

For the quality appraisal of clinical practice guidelines, the AGREE II instrument (www.agreetrust.org) is recommended. AGREE II comprises 23 items organized into 6 quality domains: i) scope and purpose; ii) stakeholder involvement; iii) rigour of development; iv) clarity of presentation; v) applicability; and vi) editorial independence. Each of the 23 items targets various aspects of practice guideline quality and can be scored on a scale from 1 (strongly disagree) to 7 (strongly agree). Two global rating items allow an overall assessment of the guideline’s quality. Detailed scoring information is provided in the instrument in attachment.

Ideally, the quality appraisal of a guideline is done by 4 reviewers, but because of feasibility 2 reviewers can be considered acceptable.

AGREE II serves 3 purposes:

1. to assess the quality of guidelines;

2. to provide a methodological strategy for the development of guidelines; and

3. to inform what information and how information ought to be reported in guidelines.

Files

8. Data extraction

Data extraction implies the process of extracting the information from the selected studies that will be ultimately reported. In order to allow an efficient data extraction, the process should be detailed in the protocol before the literature search is started. Key components of the data extraction include:

- information about study reference(s) and author(s);

- verification of study eligibility;

- study characteristics:

- study methods

- participants

- interventions

- outcomes measures and results

Evidence tables

All validated studies identified from the systematic literature review relating to each key search question are summarized into evidence tables. The content of the evidence tables is determined by the entire project group. Completion for all retained articles is done by one member of the project group and checked by another member. A KCE template for evidence tables was developed using the CoCanCPG evidence tables (www.cocancpg.eu/) and the GIN evidence tables (http://g-i-n.net/activities/etwg/progresses-of-the-etwg) as a basis, and can be found in attachment. A template is available for systematic reviews, intervention studies, diagnostic accuracy studies and prognostic studies.

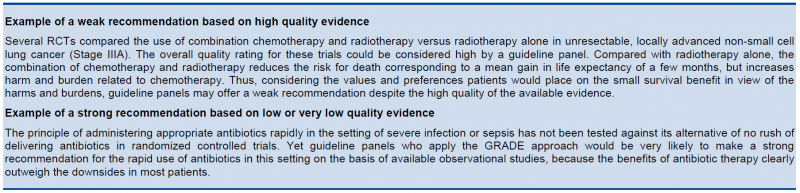

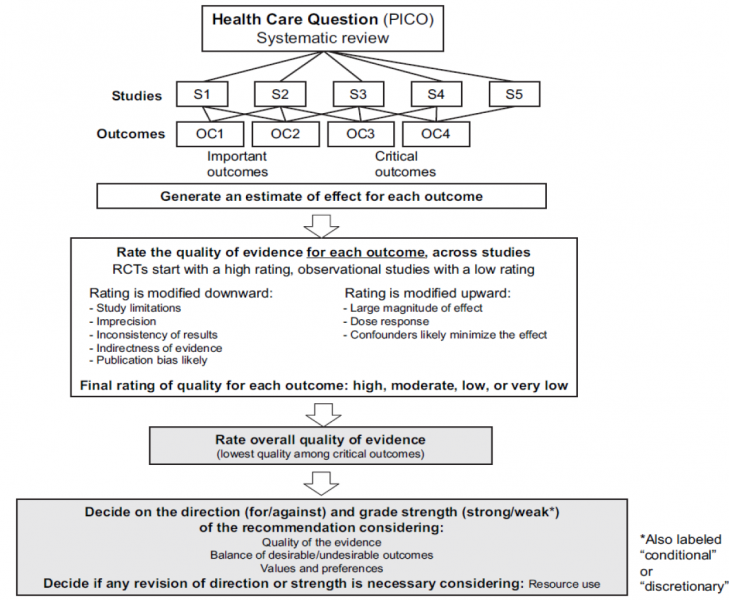

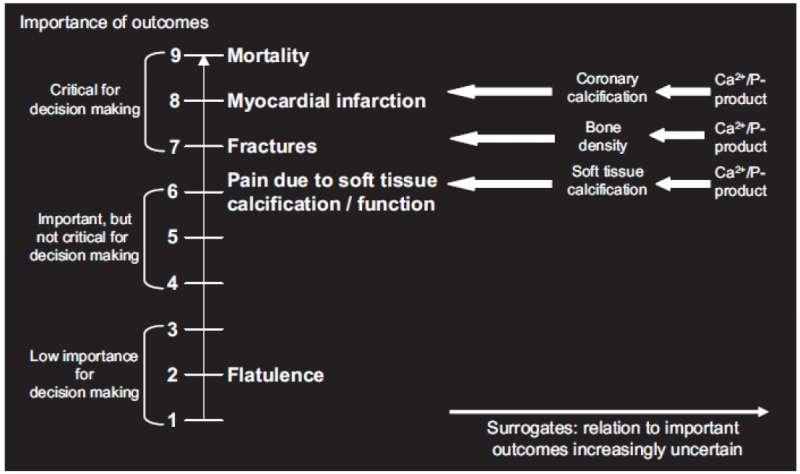

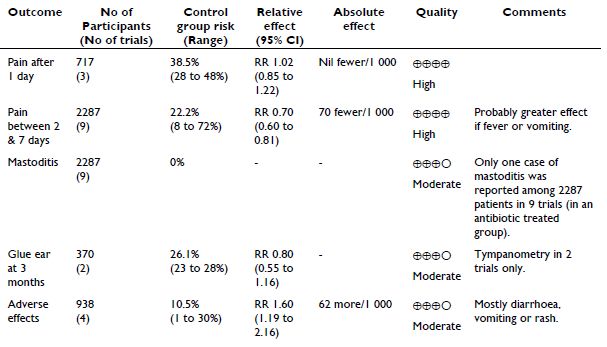

GRADE evidence profiles

To provide an overview of the body of evidence for each comparison relevant to the research question, GRADE evidence profiles are created and added to the appendix of the report. These evidence profiles can serve as a basis for the content discussions during the expert meetings. To create these evidence profiles it is highly recommended to use the GRADEpro software, which can be downloaded for free (http://ims.cochrane.org/revman/other-resources/gradepro/download).

When a meta-analysis is possible, it is recommended to extract the necessary information to Review Manager (RevMan) first, and subsequently to import this information from RevMan into GRADEpro (using the button ‘Import from RevMan’). More information on the use of RevMan can be found here: http://ims.cochrane.org/revman.

Once all information is extracted in GRADEpro, evidence profiles can be created by clicking the ‘Preview SoF table’ button, selecting the format ‘GRADE evidence profile’ and exporting them to a Word Document.

9. Analysing and interpreting results

KCE Webmaster Tue, 11/16/2021 - 16:39Once the eligible studies are selected and quality appraised, the magnitude of the intervention effect should be estimated. The best way to do this is by performing a meta-analysis (i.e. the statistical combination of results from two or more separate studies), although this is not always feasible. An interesting tool for doing a limited meta-analysis is the free Review Manager software of the Cochrane Collaboration.

The starting point of the analysis and interpretation of the study results involves the identification of the data type for the outcome measurements. Five different types of outcome data can be considered:

- dichotomous data: two possible categorical response;

- continuous data

- ordinal data: several ordered categories;

- counts and rates calculated from counting the numbers of events that each individual experiences;

- time-to-event data

Only dichotomous data will be addressed here. Dichotomous outcome data arise when the outcome for every study participant is one of two possibilities, for example, dead or alive. These data can be summarised in a 2x2 table:

| Outcome | |||

| YES | NO | ||

| Intervention | a | b | a + b |

| Control | c | d | c + d |

| a + c | b + d |

The most commonly encountered effect measures used in clinical trials with dichotomous data are:

- Relative risk (RR): the ratio of the risk (i.e. the probability with which the outcome will occur) of the outcome in the two groups, or [a/(a+b)]/[c/(c+d)]. For example, a RR of 3 implies that the outcome with treatment is three times more likely to occur than without treatment;

- Absolute risk reduction (ARR): the absolute difference of the risk of the outcome in the two groups, or [a/(a+b)]-[c/(c+d)];

- Number needed to treat (NNT): the number of persons that need to be treated with the intervention in order to prevent one additional outcome, or 1/ARR.

- For diagnostic accuracy studies, the results will be expressed as

- Sensitivity: the proportion of true positives correctly identified by the test: Sens=a/a+c

- Specificity: the proportion of true negatives correctly identified by the test: Spec=d/b+d

- Positive predictive value: the proportion of patients with a positive test result correctly diagnosed: PPV=a/a+b

- Negative predictive value: the proportion of patients with a negative test result correctly diagnosed: NPV=d/c+d

- Likelihood ratio: likelihood that a given test result would be expected in a patient with the target disorder compared to the likelihood that that same result would be expected in a patient without the target disorder LR+=(a/a+c)/(b/b+d); LR-=(c/a+c)/(d/b+d)

- Diagnostic odds ratio: ratio of the odds of having a positive index test result in a patient with the target condition over the odds of having this test result in a patient without the target condition: OR=ad/bc

| Target condition Positive | Target condition Negative | |

| Index test positive | a | b |

| Index test negative | c | d |

As discussed above, other types than dichotomous data are possible, each with their own outcome measures and statistics. It is beyond the scope of this document to describe and discuss all these types. Interested readers are referred to textbooks such as Practical statistics for medical research (Altman 1991) Modern Epidemiology (Rothman and Greenland 1998) and Clinical epidemiology : a basic science for clinical medicine (Sackett 1991) .

10. Reporting of the literature review

KCE Webmaster Tue, 11/16/2021 - 17:41A literature search should be reproducible and therefore explicitly documented. The report of a literature search should contain the following items:

1. Description of the search methodology:

a. Search protocol

i. Search question

ii. Searched databases

iii. Search terms, their combinations and the restrictions used (e.g. language, date)

iv. In- and exclusion criteria for the selection of the studies

b. Quality appraisal methodology

c. Data extraction methodology

2. Description of the search results:

a. Number of retrieved articles, in- and excluded studies, and reasons for exclusion; use of flow chart

b. Results of quality appraisal

c. Evidence tables for each search question

LITERATURE REVIEW AND INTERNATIONAL COMPARISON (HSR)

KCE Webmaster Tue, 11/16/2021 - 17:41See the attached document

Table of content

- Introduction

- Literature review

- Introduction

- Literature review

- Meta review: reveiw of review

- International comparison

- Rationales for international comparisons in HSR research: does an international comparison serve your problem?

- Adapt the “set-up” of the international comparison to theproblem you want to address

DATA - COLLECTION AND ANALYSIS

KCE Webmaster Tue, 11/16/2021 - 17:41<This chapter will be published in September 2013>

QUALITATIVE DATA (GCP - HSR - HTA)

Although there is no unified definition of qualitative research, most authors agree about its main characteristics. Creswell formulated it like this: “Writers agree that one undertakes qualitative research in a natural setting where the researcher is an instrument of data collection who gathers words or pictures, analyzes them inductively, focuses on the meaning of participants, and describes a process that is expressive and persuasive in language” (Creswell, 1998). The gathering of qualitative data takes many forms, but interviewing and observing are among the most frequently used, no matter the theoretical tradition of the researcher.

1. How to chose a qualitative method?

We so far identified 4 types of QRM suitable for the KCE research projects useful to describe in a first report: interviewing (individually or in focus groups), observing and structuring discussions among experts with a Delphi survey. Others should be developed in the future.

Before entering in the practical aspect of each method, we will briefly describe them in order to give some guidance to choose the most appropriate one.

- Semi-structured individual interview aims at searching for data through questioning the respondent using conversational techniques, “…being shaped partly by the interviewer’s pre-existing topic guide and partly by concerns that are emergent in the interview.” (Bloor and Wood, 2006, p. 104). “It gives the opportunity to the respondents to tell their own stories in they own words” (Bowling, 1997, p. 336). The use of such a method in the KCE context is appropriate when the aim is to identify different point of views, beliefs, attitudes, experience of people such patients, practitioners, stakeholders, etc. when no interaction between the respondents is required or appropriate (according to the topic for example). It could also be chosen because of practical reasons, e.g. when participants are not easily ‘displaceable’, or lack time.

- Focus groups is a form of semi-structured interview. It consists on “a series of group discussions held with differently composed groups of individuals and facilitated by a researcher, were the aim is to provide data (via the capture of intra-group interaction) on groups beliefs and group norms in respect of a particular topic or set of issues” (Bloor and Wood, 2006, p. 88). This is useful “where we need interactivity to enhance brainstorming among the participants, gain insights and generate ideas in order to pursue a topic in greater depth” (Bowling, 1997, p 352). Focus groups ‘”worked well and provide the richest data in relation to public’s view of priorities for health services and (…) were less inhibiting for respondent that one-to-one interviews (Bowling, 1997, p. 354).

- Observation is useful to understand more than people say about (complex) situations (Bowling, 1997). In the KCE context, it will be useful for site visits, when preparing a report on a hospital or a health service, a procedure, etc.

- The Delphi survey aims to achieve consensus or define positions among experts panelists, through iterations of anonymous opinions and of proposed compromise statements from the group moderator (Bloor and Wood, 2006). For KCE reports, this method could be useful for setting priorities, clarify acceptability of a new technology or system or innovations.

2. How to set up?

Following the discussions we have heard in the different focus groups, not every KCE researcher expressed the need to use or understand QRM. Nevertheless, for those interested in QRM, we try to respond to the different researchers’ needs through the notes that will be published in the KCE process book.

[1] For further reading: Silverman (2011)

Why opt for a qualitative approach?

“The goal of qualitative research is the development of concepts which help us to understand social phenomena in natural (rather than experimental) settings, giving due emphasis to the meanings, experiences, and views of all the participants” (Mays, 1995,p. 43). This quotation gives a nice summary of the specificities of qualitative research methods, which are discussed below.

A. Specificities of qualitative research methods

First, qualitative research encompasses all forms of field research performed with qualitative data. “Qualitative” refers to data in nonnumeric form, such as words and narratives. There are different sources for qualitative data, such as observations, document analysis, interviews, pictures or video’s, etc. Each of these data-gathering techniques has its particular strengths and weaknesses that have to be reflected upon when choosing for a qualitative research technique. In the social sciences, the use of qualitative data is also closely related to different paradigms trying to develop insight in social reality. Elaboration on these paradigms is however outside the scope of this process note [1].

Second, the aim of qualitative research is developing a “thick description[2]” and “grounded or in-depth understanding” of the focus of inquiry. The benefits of well developed qualitative data-collection are precisely richness of data and deeper insight into the problem studied. They do not only target to describe but help also to get more meaningful explanations on a phenomenon. They are also useful in generating hypotheses (Sofaer, 1999). Types of research questions typically answered by qualitative research are “What is going on? What are the dimensions of the concept? What variations exist? Why is this happening?” (Huston,1998). Qualitative research techniques are primarily used to trace “meanings that people give to social phenomena” and “interaction processes”, including the interpretation of these interactions (Pope, 1995). “They allow people to speak in their own voice, rather than conforming to categories and terms imposed on them by others.” (Sofaer, 1999, p. 1105). This kind of research is also appropriate to investigate social phenomena related to health(Huston,1998).

Third, one of the key strengths of qualitative research is that it studies people in their natural settings rather than in artificial or experimental ones. Since health related experiences and beliefs are closely linked to daily life situations it is less meaningful to research them in an artificial context such as an experiment. Therefore data is collected by interacting with people in their own language and observing them in their own territory (Kirk, 1986) or a place of their own choice. This is also referred to as naturalism. Therefore the term naturalistic methods is sometimes used to denote some, but not all, qualitative research(Pope, 2006). Also this characteristic is not always relevant to the use of QRM at the KCE. For example focus group interviews are usually not performed in the natural setting of the participants, but rather in the setting of a meeting room.

A fourth feature of qualitative research in health care is that it often employs several different qualitative methods to answer one and the same research question (Pope, 2006). This relates partly to what is called triangulation (see here).

Finally, qualitative research is always iterative starting with assumptions, hypotheses, mind sets or general theories which change and develop throughout the successive steps of the research process. It is desirable to make these initial assumptions explicit at the beginning of the process and document the acquired new insights or knowledge at each step.

[1] For those interested we refer to Denzin and Lincoln, 2008 a, Denzin and Lincoln, 2008 b, Bourgeault et al., 2012 or in Dutch, Mortelmans, 2009

[2] A “thick description” of a human practice or behavior include not only the focus of the study, but its context as well, such it becomes meaningful to an outsider. The term was introduced in the social science literature by the anthropologist C. Geertz in his essay in 1973

B. Qualitative versus quantitative approaches

Laurence.Kohn Tue, 11/16/2021 - 17:41Although it is meaningful to do qualitative research in itself, qualitative research is often defined by reference to quantitative research. Often it is assumed that because qualitative research does not seek to quantify or enumerate, it does not ‘measure’. Qualitative research generally deals with words or discourses rather than numbers, and measurement in qualitative research is usually concerned with taxonomies or classifications. “Qualitative research answers questions such as, ‘what is X, and how does X vary in different circumstances, and why’, rather than ‘how big is X or how many X’s are there?”(Pope, 2006, p3).

By emphasizing the differences the qualitative and quantitative approach are presented as opposites. However, qualitative and quantitative approaches are complementary and are often integrated in one and the same research project. For example in mixed methods research the strengths of quantitative and qualitative research are combined for the purpose of obtaining a richer and deeper understanding (Zang, 2012). Also qualitative data could be analyzed in a quantitative way by for example counting the occurrence of certain words.

Often health services researchers draw on multiple sources of data and multiple strategies of inquiry in order to explore the complex processes, structures and outcomes of health care. It is common that quantitative and qualitative methods answer different questions to provide a well-integrated picture of the situation under study(Patton, 1999). Especially in the field of health services research qualitative and quantitative methods are increasingly being used together in mixed method approaches. The ways QRMs could be used combined or not, are:

Qualitative research only:

- To know the variation in experiences related to health or illness.

- To build typologies regarding health services use, patient attitudes, health beliefs, etc.

- Qualitative preliminarly to quantitative:

- To explore new area, new concepts, new behaviour, etc.(Pope, 1995) before to start with measurement.

- To build quantitative data collection tools (questionnaires): using appropriate wording(Pope, 1995), variables to submit, to develop reliable and valid survey instruments(Sofaer, 1999), etc.

- To pre-test survey instruments(Sofaer, 1999).

- In supplement to quantitative work:

- As a part of a triangulation process that consist in confronting results coming from several data sources(Pope, 1995).

- To reach a different level of knowledge(Pope, 1995): “If we focus research only on what we already know how to quantify, indeed only on that which can ultimately be reliably quantified, we risk ignoring factors that are more significant in explaining important realities and relationships.” (Sofaer, 1999, p. 1102).

- In complement to quantitative work by exploring complex phenomena or areas that are not reachable with quantitative approaches(Pope, 1995).

- Sofaer(Sofaer, 1999) provides us the insight that in many cases, inquiry can move from being unstructured, largely qualitative in nature, to being structured and largely quantitative in nature. This is how she describes the continuum: “(…) there is uncertainty not only about answers, but about what the right questions might be; about how they should be framed to get meaningful answers; and about where and to whom questions should be addressed. As understanding increases, some of the right questions emerge, but uncertainty remains about whether all of the right questions have been identified. Further along, confidence grows that almost all of the important questions have been identified and perhaps framed in more specific terms, but uncertainty still exists about the range of possible answers to those questions. Eventually, a high level of certainty is reached about the range of almost all of the possible answers.” (p. 1103).

- In sum, over time investigations related to a certain area, start with qualitative research to explore the field, find the right questions, prepare for more focused questions and discover theories and hypotheses. Next, quantitative research is in place to test hypotheses and finally, qualitative research can be used to deepen the findings or to search for explanations quantitative research techniques cannot provide.

3. How to collect?

<This chapter will be published in December 2013>

3.1 Interviewing (individuals, groups)

There are many ways to interview people, e.g. individually or in focus groups. However, they share some general principles and techniques. Therefore in what follows we address the general principles. After that we present a chapter on individual semi-structured interviews and a chapter on focus groups.

3.1.1 General principles

3.1.1.1 How to plan the research design?

As with any data collection, interviewing (individually or in focus groups) has to be planned within the overall research approach taking into account the particular aims of the qualitative data collection.

The planning of data collection has to be prepared early in the process of the overall research. Qualitative research is time consuming, on the level of data-collection, data-analysis and reporting. All the steps are presented in the next figure.

Figure 2 – Flowchart: interviewing people

3.1.1.2 Sampling issues in qualitative research: who and how many?

Selection of participants

In qualitative research we select people who are likely to provide the most relevant information (Huston 1998). In order to design the sample and cover all variability around the research issue, the researchers must have an idea about the different perspectives that should be represented in the sample. This is called “field mapping” of the key players who have a certain interest in the problem under study. The role of this explicit “field mapping” is often underestimated but essential in order to build a purposive sample. It is possible that this “field map” evolves during the data collection. The notion of “representativeness” here is not understood in the statistical way. The idea of representation is seen as a “representation of perspectives, meanings, opinions and ideas” of different stakeholders in relation to the problem researched and their interest. In order to select the participants for interviews or focus groups, one should ask “do we expect that this person can talk about (represent) the perspectives (meanings given to the situation) of this stakeholder group”. The aim is to maximize the opportunity of producing enough data to answer the research question (Green 2004).

Ideally there should be a mixture of different “population characteristics” to ensure that arguments and ideas of the participants represent the opinions and attitudes of the relevant population. Also the unit of analysis should be taken into account. This could be for example “individuals for their personal opinions/experience/expertise” or “individuals because they represent organizational perspectives”.

Moreover in order to make comparisons within and between types of participants, the sample design should take this already into account. In Table 9, two criteria for comparison, for example age and socio-economic status, are already included to allow comparative analysis between age or status groups.

Sampling approaches

There is a wide range of sampling approaches (e.g. Miles and Huberman 1994, Patton 2002, Strauss and Corbin 2008). It is not uncommon in qualitative research that the research team continues to make sampling decisions during the process of collecting and analysing data. However, a clear documentation of the sampling criteria is needed when doing qualitative research. These criteria should cover all relevant aspects of the research topic. The researcher should identify the central criteria and translate them in observable sample criteria. In addition, the chosen criteria should leave enough variation to explore the research topic (Mortelmans, 2009). For example, in a research about factors influencing the decision to have or refrain from having a refractive eye surgery in the two last years, sampling criteria were:

- To have experienced or to have considered a refractive surgery. We want to explore both the pro and cons.

- To be older than 20 and younger than 70. Refractive eye surgery is not an option for those younger than 20 or older than 70.

In what follows we describe a number of sampling strategies. All the sampling strategies are non-probabilistic. A randomized sample is not useful in qualitative research, since generalizability to the general population is not the aim. Moreover with a random sample the researcher would run the risk of selecting people who have no link with the research subject and thus nothing to tell about it (Mortelmans, 2009). In purposive sampling the point of departure are the sampling criteria as described above. There are different forms of purposive sampling:

- Stratified purposive sampling (Patton, 2002):

Purposive samples can be stratified (or nested) by selecting particular persons that vary according to a key dimension/characteristic (e.g. a sample of people from large hospitals, and a different sample with people from small hospitals) and the selection ideally represents the different positions within the ‘system’ or phenomenon under investigation. The stratification criteria are the equivalent of independent variables in quantitative research. The researcher should think ahead about independent variables which could provide new information regarding the research topic. For example, in the research project on refractive eye surgery we expected that reasons to chose or refrain from chosing for refractive eye surgery vary with age, with financial resources and can be different in the Dutch- and French-speaking part of the country. Therefore we added age, socio-economic status and region as criteria introducing heterogeneity. This results in the following matrix: - Homogeneous sampling:

In the case of homogeneous sampling variation between respondents is minimised. Participants are chosen because they are alike, in order to focus on one particular process or situation they have in common (Mortelmans, 2009) . However the homogenous character does not exclude comparisons between types of participants, because for example unanticipated dimensions might emerge from the data. It is also useful to take into account hierarchy, hence not to put for example nurses and specialists working in the same hospital together in a focus group, as this might create bias in the responses.This sampling strategy is used when the goal of the research is to develop an in-depth understanding and description of a particular group with similar characteristics or people on equal foot. For example for the KCE research project on alternative medicines 48-50 only regular users were sampled.

Table 9 – Example of stratified purposive sample

Already had eye surgery or surgery planned | Considered eye surgery but refrained from having it | |||||||||||||||||

Age | 20-30 | 31-40 | >40 | 20-30 | 31-40 | >40 | ||||||||||||

Socio-economic status | a | b | c | a | b | c | a | b | c | a | b | c | a | b | c | a | b | c |

Number of respondents | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | |||||||

3.1.1.3 How to develop an interview guide?

An interview guide should be adapted to the language and vocabulary of the participant(s) and is generally built out of three components:

- A reminder of the goal of the research.

- The main topics or questions, the interviewer wants to address during the interview.

- Relaunching questions. They are an essential part of the interview. It may happen that the interviewee does not give an answer to the question or gives an unexpected answer. In that case the interviewer can probe in order to delve deeper. In case a respondent does mention an aspect you thought of in advance or you are particularly interested in, you can repose the question focused on that specific issue. For example the initial question could be: “Which difficulties you experienced after your surgery?”. The respondent mentions all kinds of worries and inconveniences, but you are particularly interested in the organization of after care. Hence you could ask: “How did you experience the organization of after care?”.

How to construct a topic list or semi-structured questionnaire?

A topic list covers all the topics the interviewer should ask during the interview. It enables the interviewer to guide the interview while allowing the discussion to flow naturally. The sequence of topics generally moves from the general to the specific. The sequencing of topics can be introduced in a flexible way, and within a general framework of topics, the focus of the discussion can be reset. A topic list is also used in preparation of the semi-structured questionnaire

In a questionnaire semi-structured questions are formulated in speaking language and are posed as such during the interview. The same questions with the same formulation, sometimes in the same sequence, are posed in each interview. The disadvantage however is that it can threaten the natural flow of the conversation.

Both for the topic list and the semi-structured questionnaire, questions/topics should evidently be selected in function of the research objectives. An open ended-formulation of the questions is important in order to enable the interviewee to talk freely without predispositions of the interviewer influencing the narrative. For example, rather than asking “Did you worry about the surgery?”, one could ask “How did you feel about the surgery?”.

A topic list or questionnaire may be adapted or improved in the course of the research, in line with the iterative nature of QRM. The more interviews you have done, the more you know and the more specific or detailed your questions can be (Mortelmans, 2009). However, continuity should be guarded. The topics of the first interview should also be represented in the following interviews, although the latter can also contain much more detailed questions.

For an example of a topic list and a semi-structured questionnaire, see Appendix 6 and Appendix 7 respectively.

What types of questions can be posed?[a]

The interview starts with an easy opening question which is mostly to set the interviewee at ease, break the ice and get to know each other. With this question the researcher does not expect to get a lot of useful information, the main function is to start up the conversation.

After that the conversation is started with a first general and easy to answer question addressing the content of the research. It can be an attitude question to enable the respondents to roll into the conversation. An example could be: “If you hear breast cancer screening, what are your first thoughts?”.

Next, transition questions involve the respondents in the research subject, for example through asking questions about personal experiences or specific behavior regarding the topic. Attitudinal questions are more difficult to answer and should therefore be addressed later in the interview. An example is “How did you experience your eye surgery?”.

Subsequently the key questions are addressed. These questions are the reason why the interview is done. The interviewer can make clear that the interviewee can take some time to answer these questions. An interview can count up to five key questions each taking up to fifteen minutes to answer them.

Finally, the interview is terminated by means of a concluding question and thanking the interviewee for his participation. Three types of concluding questions can be distinguished:

Summary questions provide the interviewee with a summary of what he has told the interviewer,Final questions can address elements that have not been mentioned during the interview, for example: “Do you want to add something to this interview?”. Make sure you allow enough time for the concluding questions.

It is useful to conduct a pilot (focus group) interview in order to test, assess and validate the format and the appropriateness of the topic guide or questionnaire.

3.1.1.4 How to run the data collection?

Preparations for the interview

Preparations for the interview encompass the recruitment of participants and the making of appointments, becoming knowledgeable about the research topic, including learning the interview guide by heart, anticipating questions of participants regarding the research project, access to a physical space where the interviews can take place and preparation of the recording equipment (Mack,2005). Well functioning of the recorders is crucial, so batteries, tapes and microphones should be carefully checked. It could be practical to foresee a second recorder as back-up. Finally also a notebook, a pen, and of course the topic list or interview guide you prepared for the interview should not be forgotten.

Box 2: What to take to the interview?

Equipment

- digital tape recorder (plus 1 extra, if available)

- Spare batteries

- Field notebook and pens

Interview packet

- 1 interview guide (in the appropriate language)

- informed consent forms (2 per participants: 1 for interviewer, 1 for each participant, in the appropriate language)

- Participant reimbursement (if applicable)

Source: Adapted from Mack, 2005

Running the interview

Informed consent should be obtained from each participant before starting the interview. Also permission should be asked to record the interview. Also it should be explained how the tapes will be used and stored.

The research aims should be briefly repeated. Probably the research aims were already explained during the first contact with the respondent in order to convince him of participating. Next, all the topics or questions on the checklist or questionnaire need to be addressed. Participants are probed for elaboration of their responses in order to learn everything they want to share about the research topic54. Mobile phones should be switched off during the interview so as not to imply that the participant’s testimony is of secondary importance.

During the interview back-up notes could be taken, the interviewee’s behaviors and contextual aspects of the interview should be observed and documented as part of the field notes. Field notes are expanded as soon as possible after each interview, preferably within 24 hours, while the memory is still fresh (Mack,2005).

To get deeper or redirect the discussion, probing techniques can be used:

- Repeat the question but in a different wording.

- Summarise the anwer the relevant aspects of the interviewee’s answer, in an interrogative way. For example: “In sum, you say that…?”

- Probe explicitly, for example: “What do you mean?” or “Could you give me a second example?”

- Purposive probing, for example: “Why was it that you?” or “What happened then?”

- Repeat the last couple of words in an interrogative way. For example: “R: (…) I think it is dangerous and I don’t trust doctors”. I: ”You don’t trust doctors?”

- Introduce a short silence.

- Verbalise emotions, for example: “I can see that thinking of that discussion makes you very angry.”

The interview is closed by thanking the participant(s).

3.1.1.5 How to prepare the data for analysis?

Transcribing is the procedure for producing a written version of the interview. Ideally, the information recorded during the interview will need to be transcribed in order to enable accurate data analysis. A transcript is a full written literal text of the interview. It often produces a lot of written text.

Good quality transcribing is not simply transferring words from the tape to the page. The wording communicates only a small proportion of the message. A lot of additional information is to be found in the way people speak. Tone and inflection, timing of reactions are important indicators too. With experienced observers and note-takers, a thematic analysis of the notes taken during the interviews could be used as a basis for analysis of the “non-verbal” aspects.

Transcribing is a time consuming and costly part of the study. The research team should consider in advance the question "who should do the transcribing”? Resources may be needed to pay an audio typist, a strategy usually more cost effective than a researcher. Be aware that “typists” are often unfamiliar with the terminology or language used in the interviews which can lead to mistakes and/or prolong the transcribing time.

It may not be essential to transcribe every interview. It is possible to use a technique known as tape and notebook analysis, which means taking notes from a playback of the tape recorded interview and triangulating them with the notes taken by the observers and note-takers. However, bias can occur if inexperienced qualitative researchers attempt tape and notebook analysis. It is certainly preferable to produce full transcripts of the first few interviews. Once the researcher becomes familiar with the key messages emerging from the data tape analysis may be possible. Transcripts are especially valuable when several researchers work with the same data.

3.1.1.6 What are the common pitfalls?

In the following paragraph we mention a number of common pitfalls typical for interviews. They are based on the work of Mortelmans (Mortelmans, 2009) and the Qualitative Research Guidelines Project (Cohen, 2008).

- The methodology needs to be transparent. Each step of the sampling, data collection and analysis should be described in sufficient detail, this means that it must enable other researchers to replicate the same study.

- The sample should be well constructed and described.

- Avoid dichotomous questions which elicit a yes or a no. In an interview we are especially interested in rich descriptions and we want the interviewee to talk a lot and elaborate on the topic of the question.

- Avoid double questions, for example: “Once you decided to have a screening, what was the next step? How did you proceed? How did it change the way you thought about potential risks?” The interviewee can not respond to all the questions at once and thus picks out one. This means the other questions are lost.

- Avoid the expression of value judgements or your own opinion, for example: “What do you think about the endless waiting times?” The word “endless” suggests irritation.

- Avoid to be suggestive, for instance by giving examples: “Which kind of difficulties did you encounter, like long waiting times, full waiting rooms etc?” This kind of examples provide the interviewee with a frame, which he will possibly not transcend. This way you loose what he would have answered spontaneously.

- Avoid a reverse of roles. The interviewee should not be asking you questions. An example could be: I: “What does it mean to you to be a patient?”, R: “I don’t know. What does it mean to you?”. If this happens you can say that you are willing to answer that question after the interview, but that you can not answer it during the interview in order not to influence the answers of the interviewee. A reverse of roles can be avoided if the interviewer introduces himself in a neutral way, for example as a researcher, but not as, for example a physician or an expert in an issue related to the topic/goal of the interview, in order for the respondent not to ask you too many questions on a particular condition or issue.

- Avoid letting the interviewee deviate to far from the topic or elaborates on irrelevant matters by returning to the question posed.

- Avoid being too jargony, but use a familiar terminology which does not need explications or definitions.

- The analysis should not be superficial but really in-depth. However it may not transcend the data. The data must always support the results.

3.1.2 Individual interviews

3.1.2.1 What are individual semi-structured interviews?

Interviews are used in many contexts (journalism, human resource managers, etc.) and for many purposes (entertainment, recruitment of personnel, etc.), hence scientific data collection is only one very specific application, which should not be confused with other applications. The interview is easily trivialized as it is common practice in the media landscape which surrounds us. Fontana and Frey even speak about “the interview society” according to Atkinson and Silverman. Practicing health professionals routinely interview patients during their clinical work, and they may wonder whether simply talking to people constitutes a legitimate form of research (DiCicco-Bloom et al,2006). In qualitative research, however, interviewing is a well established research technique and two types can be distinguished: semi-structured and unstructured. Structured interviews are out of scope here, because they consist of administering structured questionnaires producing quantitative data.

Unstructured interviews are more or less equivalent to guided conversations(DiCicco-Bloom et al,2006). Originally they were part of ethnographers’ field work, consisting of participant observation and interviewing key informants on an ongoing basis to elicit information about the meaning of observed behaviors, interactions, or artifacts(DiCicco-Bloom et al,2006). There is no list of questions, nor an interview guide, the questions asked are based on the responses of the interviewee, as in the natural flow of a conversation (Britten, 1995).